Decoding the Black Box: Demystifying Fraud Decisions with Explainable AI

Highlights:

- Boost Manual Review Efficiency: Explainable AI for Omniscore provides clear, factor-by-factor risk assessments, enabling users to quickly identify key risk drivers, improve accuracy, and significantly reduce the time and resources spent on manual investigations.

- Strengthen Trust and Simplify Policies: By illuminating the "black box" of AI, Equifax fosters greater user trust in its fraud detection decisions, which in turn reduces the need for overly complex rules and simplifies overall system management.

Artificial intelligence (AI) has revolutionized fraud detection, offering unparalleled speed and accuracy. However, as AI models grow in complexity, a significant challenge has emerged: the "black box" problem. We feed data into these advanced algorithms and they deliver decisions, but the reasoning behind those decisions often remains opaque. This lack of transparency can erode trust and hinder effective decision-making, particularly in the critical realm of payment fraud.

When fraud teams use technology to fight payments fraud, it is not always clear why a particular decision was made or which factors influenced the outcome. This ambiguity creates friction, leading to time-consuming manual reviews and the management of complex policies.

The Need for Clarity: Why Explainable AI Matters

Model explainability isn't just a nice-to-have; it's a strategic imperative. For Equifax, it's about building stronger customer confidence and trust. By leading with explainable AI, we empower users to make more informed decisions, ultimately driving greater value through deeper understanding.

Introducing Explainable AI for Omniscore

To address this critical need, Equifax has introduced a powerful new feature for Payments Fraud designed to shed light on the inner workings of the Omniscore. This feature provides increased transparency, allowing users to understand what factors contributed to a transaction's risk assessment.

This new functionality is broken into four key areas:

-

Expansion Action in the Omniscore Widget: This allows users to quickly access a detailed breakdown of the factors influencing the Omniscore directly from the transaction details view.

-

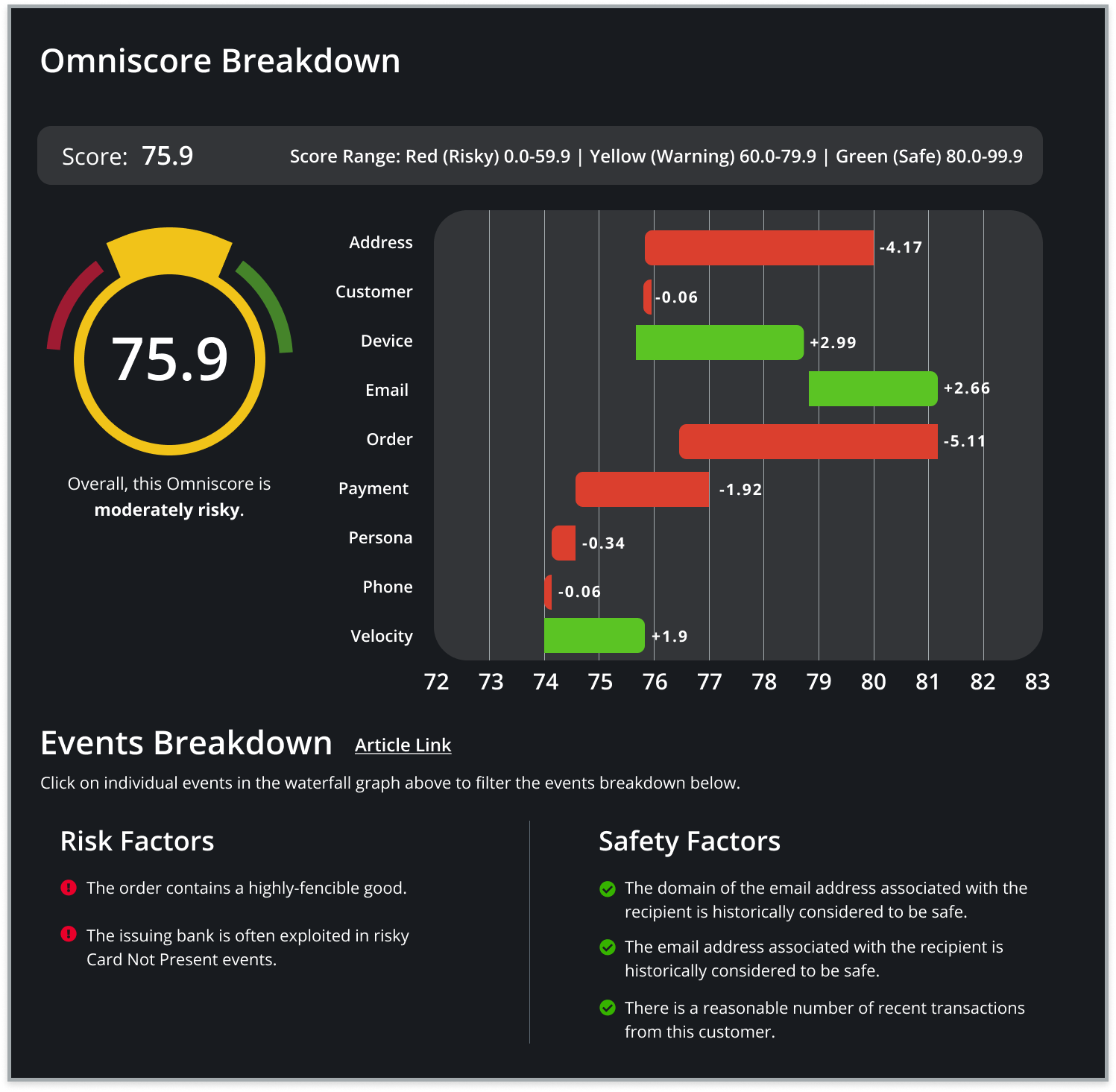

Omniscore Explainability Display: A dedicated modal provides a comprehensive view of the Omniscore's calculation, offering a clear and concise explanation of the contributing factors.

-

Waterfall Chart: This visual representation breaks down the Omniscore into its constituent parts, showing the positive and negative influences of each factor. This allows users to easily identify the most significant drivers of risk and safety.

-

Risk and Safety Factors: This feature clearly delineates risk factors (those that decrease the Omniscore) and safety factors (those that increase it), providing a nuanced, yet comprehensible, understanding of the transaction's profile.
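To make the waterfall chart and the risk/safety split concrete, here is a minimal Python sketch. It assumes each factor's effect is reported as a signed contribution added to a base score, so that the base plus all contributions equals the final score (the usual convention for additive attribution waterfalls). The `Contribution` class, the function names, the factor names, and the numbers are all hypothetical illustrations, not Omniscore's actual factors, scale, or API. Following the text above, negative contributions are treated as risk factors (they decrease the score) and positive ones as safety factors (they increase it).

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Contribution:
    """A hypothetical signed effect of one factor on the score."""
    factor: str
    value: float  # < 0 lowers the score (risk), > 0 raises it (safety)


def waterfall(base: float, contributions: list[Contribution]) -> list[tuple[str, float, float]]:
    """Return (factor, start, end) bars walking from the base score to the final score.

    Bars are ordered by absolute effect so the largest drivers appear first.
    """
    steps = []
    running = base
    for c in sorted(contributions, key=lambda item: abs(item.value), reverse=True):
        start = running
        running += c.value
        steps.append((c.factor, start, running))
    return steps


def split_factors(contributions: list[Contribution]) -> tuple[list[Contribution], list[Contribution]]:
    """Separate risk factors (negative effect) from safety factors (positive effect)."""
    risk = [c for c in contributions if c.value < 0]
    safety = [c for c in contributions if c.value > 0]
    return risk, safety


# Hypothetical transaction explanation (illustrative numbers only).
base_score = 500.0
contribs = [
    Contribution("billing_matches_shipping", 30.0),
    Contribution("new_device", -120.0),
    Contribution("email_age", 80.0),
    Contribution("high_velocity", -40.0),
]

for factor, start, end in waterfall(base_score, contribs):
    print(f"{factor:>26}: {start:6.1f} -> {end:6.1f}")

risk, safety = split_factors(contribs)
print("risk:", [c.factor for c in risk])
print("safety:", [c.factor for c in safety])
```

Sorting bars by absolute effect is one common design choice because it puts the strongest drivers first; the last bar lands on the same final score (450 here) whatever the order, since the contributions are simply summed.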

Benefits of Enhanced Explainability

The benefits of this new feature are significant:

- Provides Insights for Manual Reviews: Clear explanations of key risk factors give users additional data points to use during investigations.

- Improves Accuracy and Reduces Time Spent on Reviews: Users can quickly identify the key risk factors, reducing the need for extensive investigations and saving valuable time and resources.

- Reduces Complexity in Policies: The increased transparency reduces the need for overly complex rules. With greater trust in, and adoption of, the Omniscore, users can simplify the management of the system.

How Do We Do It?

By combining machine learning, game theory, and a patent-pending method for sub-millisecond inference over our vast network of fraud-related data points and variables, we measure the real impact of each feature and translate signals from our fraud network into an on-demand explanation for every transaction.
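The text does not name the specific technique, but "game theory" in feature attribution most commonly refers to Shapley values, which divide a model's output among its inputs by averaging each feature's marginal contribution over every coalition of the other features. The Python sketch below computes exact Shapley values for a tiny scoring function. It is an illustration under stated assumptions, not the patent-pending method described above: `toy_score`, its features, and the reference transaction are hypothetical; "absent" features are filled in from a single baseline transaction (one of several conventions); and exact enumeration needs on the order of 2^n model evaluations, so it is only practical for a handful of features.

```python
from __future__ import annotations

from itertools import combinations
from math import factorial
from typing import Callable, Dict

Features = Dict[str, float]


def shapley_values(
    model: Callable[[Features], float], x: Features, baseline: Features
) -> Dict[str, float]:
    """Exact Shapley attribution of model(x) - model(baseline) to each feature.

    phi_i = sum over subsets S of the other features of
            |S|! (n - |S| - 1)! / n! * [v(S with i) - v(S)],
    where v(S) scores x with every feature outside S replaced by its baseline value.
    """
    names = list(x)
    n = len(names)

    def value(coalition: frozenset) -> float:
        # Features in the coalition keep the transaction's values; the rest use the baseline.
        blended = {k: (x[k] if k in coalition else baseline[k]) for k in names}
        return model(blended)

    phi: Dict[str, float] = {}
    for i in names:
        others = [k for k in names if k != i]
        total = 0.0
        for size in range(n):  # coalition sizes 0 .. n-1
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = frozenset(subset)
                total += weight * (value(s | {i}) - value(s))
        phi[i] = total
    return phi


def toy_score(f: Features) -> float:
    """Hypothetical score where higher is safer, with one interaction term."""
    return (
        600
        - 100 * f["new_device"]
        - 50 * f["new_device"] * f["foreign_ip"]
        + f["email_age_days"] / 10
    )


transaction = {"new_device": 1, "foreign_ip": 1, "email_age_days": 900}
reference = {"new_device": 0, "foreign_ip": 0, "email_age_days": 300}

phi = shapley_values(toy_score, transaction, reference)
print({k: round(v, 6) for k, v in phi.items()})
# {'new_device': -125.0, 'foreign_ip': -25.0, 'email_age_days': 60.0}
print(round(sum(phi.values()), 6), toy_score(transaction) - toy_score(reference))
```

The interaction term is split evenly between `new_device` and `foreign_ip` (-25 each), and the three values sum to the score difference of -90. This "efficiency" property is what allows Shapley-style attributions to be drawn as a waterfall running from a base value to the final score.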

Empowering Users with Transparency

With this new feature, Equifax is moving beyond simply providing results, empowering users with the knowledge and understanding they need to make confident decisions. By illuminating the "black box" of AI, we're fostering trust, driving efficiency, and ultimately, creating a safer and more secure payment environment.
